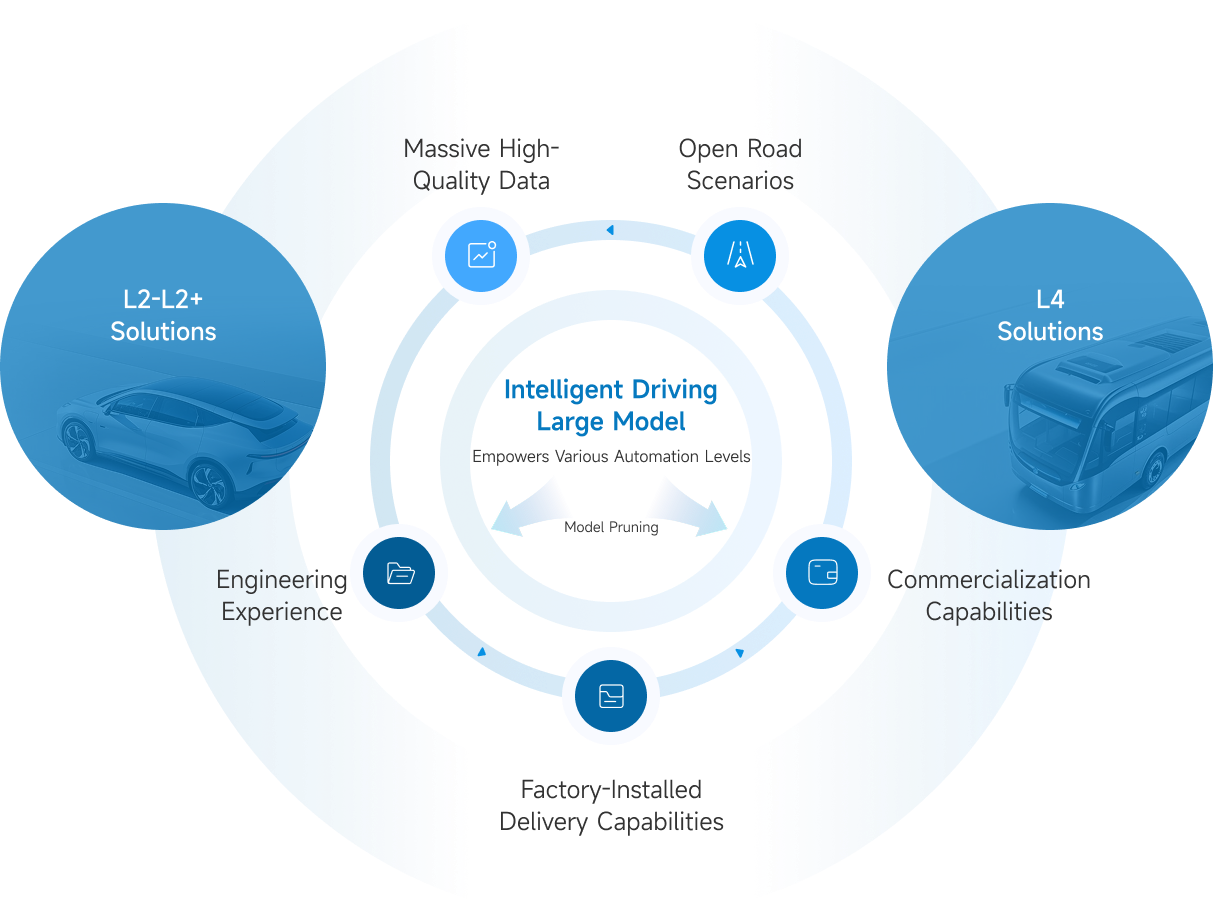

Using model pruning, a modular approach and a dual-track approach to flexibly adapt algorithm models and ensure a smooth transition between L4 and L2.

In software development, we leverage the technology stack, development platform and toolchains built for our L2-L2+ solutions to significantly enhance the R&D efficiency of our L4 solutions while reducing development costs. AI models for both our L2-L2+ and L4 solutions are built upon a unified foundation model, which is optimized and customized for each application scenario and its performance requirements through techniques such as model pruning, quantization and distillation, ensuring consistency and efficiency across technology development.
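To make the compression step concrete, here is a minimal sketch of two of the techniques named above, magnitude pruning followed by symmetric int8 quantization. It assumes a flat list of float weights and a single per-tensor scale; production pipelines work on framework tensors, usually calibrate scales per channel, and fine-tune after pruning. The function names are illustrative, not part of any tool described here.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by |w|; the first n_prune (least important) are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ≈ q * scale with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.02, -0.5, 0.9, -0.01, 0.3, -1.27]
    pruned = magnitude_prune(w, sparsity=0.5)  # half the weights become 0.0
    q, scale = quantize_int8(pruned)
    print(pruned, q, scale)
```

Pruning runs before quantization here so that the quantization scale is computed only from the weights that survive.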

We have accumulated extensive AI technology and engineering experience through numerous deliveries of L2-L2+ mass-production projects. The reliability and stability of our L2-L2+ solutions have been fully validated in mass production, which provides a replicable engineering foundation for developing and deploying our L4 systems.

On the hardware side, by reusing the sensors and domain controllers adopted in our L2-L2+ solutions, we not only ensure hardware reliability but also reduce the costs of our L4 solutions by leveraging the cost advantages of the mass-production supply chain. In addition, our experience in algorithm optimization at the L2-L2+ level enables us to optimize sensor layout and chip selection for our L4 solutions. For instance, the sensor layout of our Robotaxi solution, ConnectOne, uses fewer LiDARs, and the solution primarily runs on Horizon Robotics’ Journey 6 processing hardware with mid-range computing power, which significantly improves the cost-efficiency of our L4 solutions.

As we scale up the deployment of our L4 solutions, we can also accumulate a massive amount of high-quality data from real-world operating scenarios. This data from L4 vehicles can be fed back to optimize the models for our L2-L2+ solutions. After anonymization and cleansing, this ground-truth data is free of the biases inherent in human driving behavior, which can accelerate our product iteration cycle and reduce data collection costs compared with the traditional method of collecting data with human drivers.

Leveraging our strong software-hardware co-development capabilities, we have developed a unified heterogeneous computing architecture that adapts to multiple SoC platforms and enables deep integration of AI algorithm models with various hardware (such as computing chips and sensors). Our system architecture adopts a unified platform interface and data protocols, and an automated model conversion tool supports rapid deployment of intelligent driving systems across different SoC platforms. We also unify and standardize external interfaces through a layered, decoupled system architecture. This effectively breaks down the barriers between different hardware, ensuring consistently high performance across SoC platforms, making our software generalizable and reusable, and supporting rapid cross-platform deployment and validation of intelligent driving systems.
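The layered, decoupled idea can be illustrated with a small sketch, assuming a plain abstract base class plays the role of the unified platform interface. The backends `SocA` and `SocB`, their artifact paths and their supported precisions are hypothetical stand-ins; the text does not describe the real conversion toolchain or any vendor SDK.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelSpec:
    name: str
    precision: str  # e.g. "int8" or "fp16"


class SocBackend(ABC):
    """Unified platform interface: upper layers depend only on this class."""

    @abstractmethod
    def convert(self, model: ModelSpec) -> str:
        """Turn a framework-level model into a platform-specific artifact path."""

    @abstractmethod
    def supported_precisions(self) -> List[str]:
        ...


class SocA(SocBackend):  # hypothetical platform
    def convert(self, model: ModelSpec) -> str:
        return f"soc_a/{model.name}.{model.precision}.bin"

    def supported_precisions(self) -> List[str]:
        return ["int8", "fp16"]


class SocB(SocBackend):  # hypothetical platform
    def convert(self, model: ModelSpec) -> str:
        return f"soc_b/{model.name}.{model.precision}.pkg"

    def supported_precisions(self) -> List[str]:
        return ["int8"]


BACKENDS: Dict[str, SocBackend] = {"soc_a": SocA(), "soc_b": SocB()}


def deploy(models: List[ModelSpec], platform: str) -> List[str]:
    """The same application-level call works on every platform; only the backend differs."""
    backend = BACKENDS[platform]
    artifacts = []
    for m in models:
        if m.precision not in backend.supported_precisions():
            raise ValueError(f"{platform} does not support {m.precision} for {m.name}")
        artifacts.append(backend.convert(m))
    return artifacts
```

Adding a new SoC in this sketch means writing one new backend class; the calling code in `deploy` stays unchanged, which is the reuse property the paragraph describes.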

Rapid Adaptation to New Rules and Regions

Comprehensive coverage of diverse edge cases

Advanced object recognition, joint reasoning and behavior understanding

Reduce reliance on large-scale real-world data

We have built a VLA/VLM architecture through deep integration of end-to-end methodologies and large language models, enabling advanced object recognition, accurate prediction of traffic participants’ behavioral intentions and comprehensive environment understanding. Our natural-language-driven trajectory generator strengthens the explainability of our AI systems, thereby enhancing trust from both users and regulators. Our systems achieve exceptional robustness by leveraging multimodal fusion and redundant safety mechanisms. The system architecture compensates for the limitations of standalone end-to-end systems in extreme conditions, reduces false detections, and improves our ability to address long-tail issues through an AI model with strong generalization ability. We focus on developing lightweight AI models with high inference efficiency, compressing model parameters through model pruning, quantization and distillation. Based on our deep understanding of various SoC platforms, we have also implemented a multi-core parallel scheduling mechanism that maximizes the utilization of heterogeneous computing power on SoCs with a collaborative CPU-NPU architecture.
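Of the compression techniques listed, distillation is the least self-explanatory. The sketch below uses the widely used soft-target formulation (KL divergence between temperature-softened teacher and student distributions, scaled by T²) blended with ordinary cross-entropy on the hard label. The temperature and `alpha` defaults are assumptions for illustration; the text does not say which distillation variant is actually used.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student: List[float], teacher: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_t = softmax(teacher, temperature)
    p_s = softmax(student, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


def total_loss(student: List[float], teacher: List[float], label: int,
               alpha: float = 0.5, temperature: float = 4.0) -> float:
    """Blend the soft (teacher) loss with cross-entropy on the ground-truth label."""
    hard = -math.log(softmax(student)[label])
    return alpha * distillation_loss(student, teacher, temperature) + (1 - alpha) * hard
```

A higher temperature flattens the teacher's distribution so the smaller student also learns how the teacher ranks the wrong classes, not just which class wins.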

end-to-end · map-free · spot-to-spot

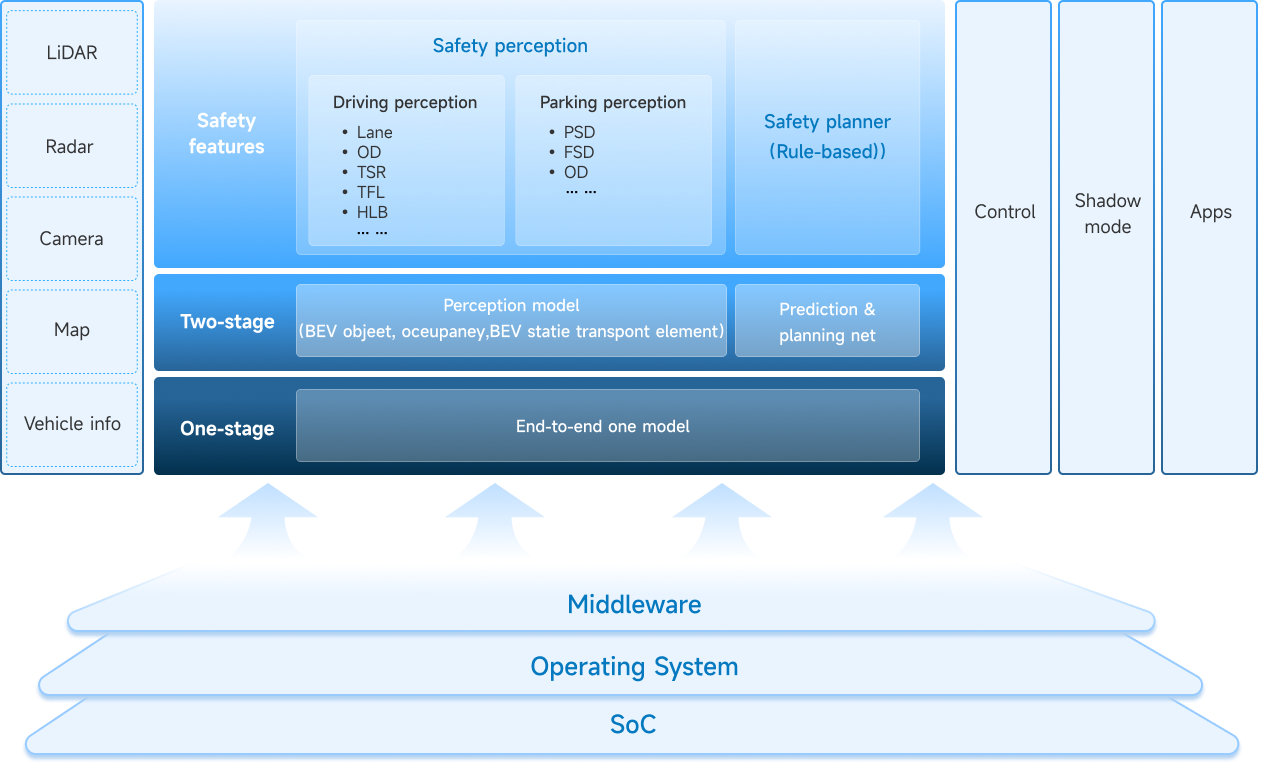

CalmDrive 1.0 forms a series of stable and reliable intelligent driving solutions by integrating multiple AI perception algorithm models, post-perception fusion models, and rule-based planning and control modules. It features clearly defined functional modules and strong explainability, but requires rule design and fallback strategies for each scenario. The interdependencies between modules are complex, and the system’s ability to handle long-tail corner cases is limited. This was the mainstream technical approach in the early stage of the intelligent driving industry.

CalmDrive 2.0 adopts a BEV-based pre-fusion perception algorithm that integrates visual and point-cloud data from different sensors to address cross-modal spatial matching challenges, and combines a rule-based planning strategy and a model-based planner in a single planning module. The system enhances temporal modeling capability, reduces missed and false detections, and significantly improves adaptability and reliability in complex scenarios. It has become a mainstream technical architecture for mass-production projects in recent years.

CalmDrive 2.5 adopts a two-stage end-to-end architecture composed of a perception module and a decision/planning module. Large models power the perception module for precise environmental understanding; its perception results are passed to a model-based planning module, which outputs control commands. This ensures seamless connections between perception, decision-making and planning, substantially reduces system complexity, optimizes information flow, minimizes information loss between models, and improves overall system efficiency and accuracy.

CalmDrive 3.0 is a one-stage end-to-end system integrating perception and planning. Using a large model, the system takes sensor data as input and directly outputs control commands. This architecture further simplifies the system structure, improves model execution efficiency and generalization capability, and strengthens system integration and operability. In addition, we have further enhanced the system’s explainability through a VLM/VLA architecture.
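The difference between the two-stage (2.5) and one-stage (3.0) designs is mainly where the interface sits. The sketch below shows only that data flow: in the two-stage version an explicit, inspectable obstacle list passes between two components, while the one-stage version maps sensor input straight to control. The `toy_*` functions are hypothetical hand-written rules standing in for learned models, and a "sensor frame" is reduced to a list of distances purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

SensorFrame = Sequence[float]  # stand-in for camera/LiDAR tensors: distances in metres


@dataclass
class Obstacle:
    x: float  # longitudinal distance ahead of the ego vehicle, metres
    y: float  # lateral offset, metres


@dataclass
class Control:
    accel: float  # m/s^2
    steer: float  # rad


def two_stage(perceive: Callable[[SensorFrame], List[Obstacle]],
              plan: Callable[[List[Obstacle]], Control]) -> Callable[[SensorFrame], Control]:
    """Perception output is an explicit intermediate that the planner consumes."""
    def drive(frame: SensorFrame) -> Control:
        return plan(perceive(frame))
    return drive


def one_stage(model: Callable[[SensorFrame], Control]) -> Callable[[SensorFrame], Control]:
    """A single model maps sensor input directly to control commands."""
    return model


def toy_perceive(frame: SensorFrame) -> List[Obstacle]:
    # Hypothetical rule: any return closer than 30 m is treated as an obstacle.
    return [Obstacle(x=d, y=0.0) for d in frame if d < 30.0]


def toy_plan(obstacles: List[Obstacle]) -> Control:
    nearest = min((o.x for o in obstacles), default=float("inf"))
    return Control(accel=-3.0 if nearest < 10.0 else 0.5, steer=0.0)


def toy_end_to_end(frame: SensorFrame) -> Control:
    # A trained network would replace this rule; no intermediate obstacle list exists.
    nearest = min(frame, default=float("inf"))
    return Control(accel=-3.0 if nearest < 10.0 else 0.5, steer=0.0)
```

Removing the intermediate `List[Obstacle]` is what eliminates hand-designed interfaces (and the information they discard) between modules; the trade-off is that explainability must come from elsewhere, which is the role the text assigns to the VLM/VLA architecture.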

Multimodal fusion drives proactive planning and decision-making for human-like, scenario-adaptive driving.

The system mimics the behavior of experienced human drivers by leveraging reinforcement learning (RL) and contrastive imitation learning (CIL), delivering driving behavior that is safe, smooth and efficient. The powerful decision model architecture boosts the autonomous driving system's cognitive intelligence and enhances performance in complex scenarios.

Data Collection · Data Management · Data Upload

Model Conversion Tool · Automated Performance Evaluation · Software Library

Algorithm Assembly · System Deployment · Verification and Validation

We have integrated multimodal AI large models into CalmVolution, which helps us analyze, break down and review customer requirements during the R&D and delivery process, accelerate code generation and assess code quality. This approach also helps us establish traceability among requirements, development, and verification and validation, which further improves our systematic error-correction capability.

Our data closed-loop subsystem, DataTurbo, was built on the philosophy of a “full-chain data closed loop.” It establishes a complete chain from data generation to value realization through deep coordination among three modules: data collection, data management and data upload. It supports efficient processing of data, a core element of intelligent driving algorithm iteration and functional optimization.
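A minimal sketch of how the three DataTurbo stages could hand data to each other, under loudly stated assumptions: collection is modelled as event triggers (hard braking, driver takeover), management as content-hash de-duplication, and upload as a priority queue under a bandwidth budget. The trigger threshold, tag names, priorities and frame fields are all hypothetical; the text does not describe DataTurbo's actual rules.

```python
import hashlib
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Clip:
    clip_id: str
    payload: bytes
    tags: List[str] = field(default_factory=list)
    size_mb: float = 1.0


def collect(frames: List[Dict], hard_brake_decel: float = -4.0) -> List[Clip]:
    """Collection: keep only frames where at least one (hypothetical) trigger fires."""
    clips = []
    for f in frames:
        tags = []
        if f["decel"] <= hard_brake_decel:
            tags.append("hard_brake")
        if f.get("takeover", False):
            tags.append("takeover")
        if tags:
            clips.append(Clip(f["id"], f["data"], tags, f.get("size_mb", 1.0)))
    return clips


def manage(clips: List[Clip]) -> List[Clip]:
    """Management: drop clips whose payload duplicates one already kept."""
    seen = set()
    unique = []
    for c in clips:
        digest = hashlib.sha256(c.payload).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(c)
    return unique


def upload(clips: List[Clip], priority: Dict[str, int], budget_mb: float) -> List[str]:
    """Upload: send the highest-priority clips first without exceeding the budget."""
    # The index i breaks ties so Clip objects are never compared directly.
    heap = [(-max(priority.get(t, 0) for t in c.tags), i, c) for i, c in enumerate(clips)]
    heapq.heapify(heap)
    sent, used = [], 0.0
    while heap:
        _, _, c = heapq.heappop(heap)
        if used + c.size_mb <= budget_mb:
            sent.append(c.clip_id)
            used += c.size_mb
    return sent
```

Filtering at the vehicle and de-duplicating before upload means scarce bandwidth is spent on the rarest, most informative clips first.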

Our model training and development platform, CalmForge, enables us to manage AI algorithm models at different stages throughout their lifecycle, including training, fine-tuning, evaluation and monitoring. With our optimized training methodology, CalmForge enables independent training and rapid iteration of multiple models in parallel, which improves the utilization of our AI training servers and shortens the model training period by more than 30% compared with conventional approaches. In addition, the model conversion tool embedded in CalmForge automatically converts our AI models to run on various SoC platforms, which enhances our cross-platform compatibility while reducing R&D expenses.

Our automated system integration platform, CalmVergence, provides essential support for the rapid deployment of our intelligent driving systems by seamlessly connecting algorithms, software and hardware. Our R&D team can integrate AI algorithm models and software from our software library with various vehicle interfaces simply by ticking checkboxes, which maximizes the reuse of our R&D outputs across projects. The reuse rate of our software and AI algorithm models across projects exceeds 70%. We can complete the deployment of a full set of systems on a new SoC platform within one month for verification and validation purposes, and it takes less than six months to complete the full cycle from deployment and verification and validation to final delivery of a mass-production project for a new vehicle model on a previously deployed SoC platform.
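The checkbox-driven assembly and the reuse-rate figure can be pictured with a toy model: ticked boxes become a deployment manifest, and the reuse rate is the share of a project's components taken unchanged from the shared library. The component names, the manifest layout and the `LIBRARY` contents are hypothetical; the text gives only the >70% result, not how it is measured.

```python
from typing import Dict, FrozenSet, List

# Hypothetical shared library of reusable components (names are illustrative).
LIBRARY: FrozenSet[str] = frozenset(
    {"bev_perception", "lane_topology", "planner", "controller", "parking_slot_detector"}
)


def assemble(checkboxes: Dict[str, bool], vehicle_interface: str) -> Dict[str, object]:
    """Turn ticked checkboxes into a deployment manifest for one vehicle interface."""
    selected = sorted(name for name, ticked in checkboxes.items() if ticked)
    return {"vehicle_interface": vehicle_interface, "components": selected}


def reuse_rate(components: List[str], library: FrozenSet[str] = LIBRARY) -> float:
    """Fraction of a project's components that come unchanged from the shared library."""
    if not components:
        return 0.0
    reused = sum(1 for c in components if c in library)
    return reused / len(components)
```

For example, a project that ticks three library components plus one project-specific component (`custom_hmi`) would have a reuse rate of 0.75 under this definition.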

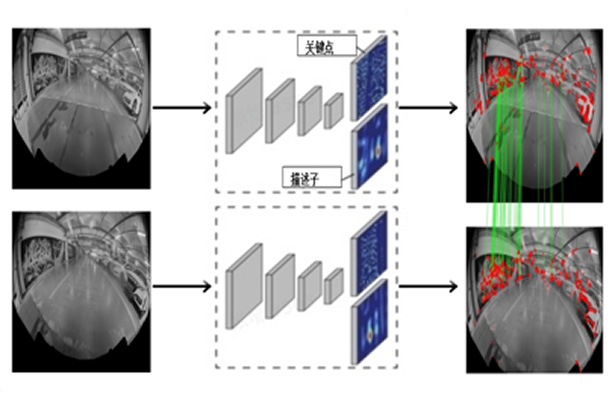

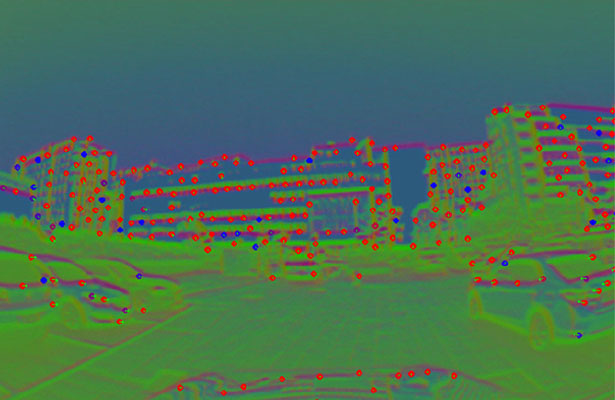

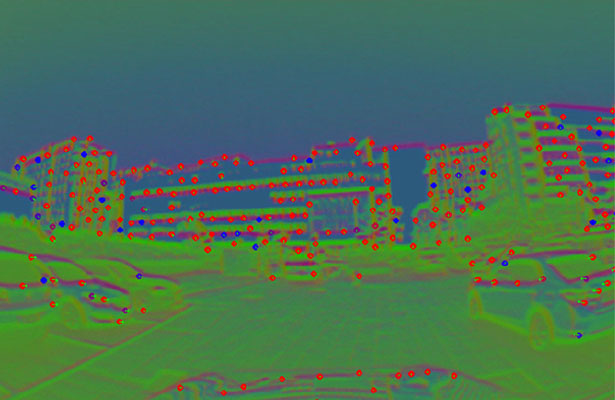

The neural network concurrently generates multi-dimensional data, including sparse feature point-cloud data, sparse semantic targets and dense semantic targets. Together, these outputs form an integrated spatial map that encompasses both ground and above-ground elements, addressing the high computational redundancy and limited information density common in traditional map extraction techniques.

By combining rich semantic data with sparse point-cloud information, the system achieves accurate scene matching and localization. It effectively overcomes challenges such as dynamic lighting changes, low-texture environments and repetitive scene textures, enabling reliable initial localization on any level of a multi-level parking structure within a memory map.
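Why combining semantics with sparse features helps on repetitive parking floors can be shown with a toy relocalization score: each memory-map keyframe is scored by semantic-landmark overlap (Jaccard) plus average best cosine similarity of feature descriptors, and the best keyframe gives the floor level. The equal weighting, the landmark labels and the keyframe layout are assumptions made for this sketch, not the system's actual matching pipeline.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set, Tuple

Vector = Sequence[float]


@dataclass
class Keyframe:
    level: str                 # parking floor, e.g. "B1" or "B2"
    semantics: Set[str]        # semantic landmarks seen (signs, pillar IDs, arrows...)
    descriptors: List[Vector]  # sparse feature-point descriptors


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def score(query_sem: Set[str], query_desc: List[Vector], kf: Keyframe,
          w_sem: float = 0.5) -> float:
    """Weighted sum of semantic overlap and mean best-match descriptor similarity."""
    sem = jaccard(query_sem, kf.semantics)
    if query_desc and kf.descriptors:
        geo = sum(max(cosine(q, d) for d in kf.descriptors) for q in query_desc) / len(query_desc)
    else:
        geo = 0.0
    return w_sem * sem + (1 - w_sem) * geo


def relocalize(query_sem: Set[str], query_desc: List[Vector],
               memory_map: List[Keyframe]) -> Tuple[str, float]:
    """Return the level of the best-scoring keyframe and its score."""
    best_score, best_level = max((score(query_sem, query_desc, kf), kf.level) for kf in memory_map)
    return best_level, best_score
```

When two floors produce identical-looking features (equal descriptor scores), the semantic term alone decides, which is the failure mode of texture-only matching that the paragraph says the combination overcomes.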

Featuring a multi-camera redundancy design, the system overcomes localization challenges in obstructed environments. It supports single-pass mapping and bidirectional cruise localization, providing precise and efficient solutions for various parking and retrieval scenarios.

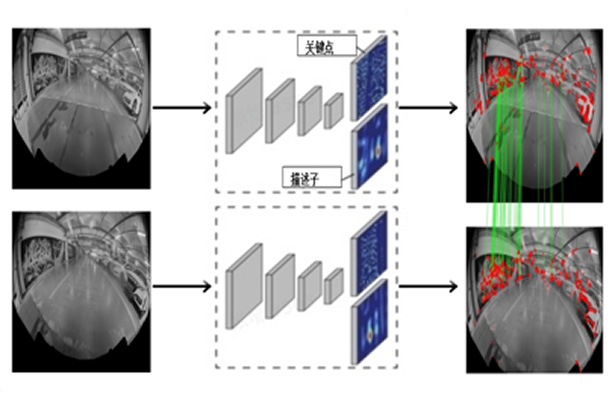

Convolutional Neural Network-Based Feature Extraction and Matching

Neural network feature example

Cross-level parking

To maximize the efficiency and performance of our AI development platform, we have developed a series of specialized toolchain products.

These tools further enhance the platform’s advantages in data processing, model training, and post-delivery support. Set forth below are selected examples of the toolchain products.

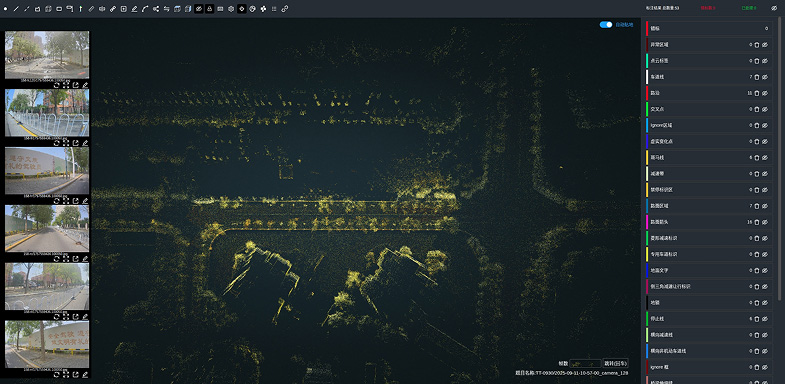

To better support end-to-end model training, we independently developed Fast4D, a proprietary 4D automatic data labelling tool. Building on traditional 3D labelling, Fast4D adds a temporal dimension to continuously and coherently track dynamic objects’ motion trajectories, status changes and interactions with their surroundings, ensuring spatiotemporal consistency in the dataset. Fast4D employs an enhanced 3D Gaussian Splatting technique, improving reconstruction efficiency 16-fold compared with traditional NeRF-based methods. The tool fuses and processes multi-sensor data to generate pre-annotated 4D data, which is then manually refined into high-quality datasets for AI model training. Fast4D increases our data labelling efficiency by approximately 120 times compared with traditional 2D/3D labelling methods.
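The temporal dimension amounts to keeping each object's identity consistent from frame to frame. A minimal illustration, assuming simple greedy nearest-neighbour association of 3D box centres with a distance gate (not Fast4D's actual method, which the text does not describe), is:

```python
import math
from typing import Dict, List, Tuple

Box = Tuple[float, float, float]  # box centre (x, y, z) in metres, world frame


def associate_tracks(frames: List[List[Box]], gate_m: float = 2.0) -> List[List[int]]:
    """Assign a persistent track ID to every detection across consecutive frames.

    Greedy nearest-neighbour matching on centre distance; a detection with no
    previous-frame track within `gate_m` metres starts a new track. Tracks that
    vanish for a frame are dropped (no occlusion handling in this sketch).
    """
    next_id = 0
    prev: Dict[int, Box] = {}  # track id -> centre in the previous frame
    out: List[List[int]] = []
    for boxes in frames:
        # All (distance, track, detection) candidates, closest pairs first.
        pairs = sorted(
            (math.dist(b, c), tid, j)
            for tid, c in prev.items()
            for j, b in enumerate(boxes)
        )
        ids = [-1] * len(boxes)
        used_tracks = set()
        for d, tid, j in pairs:
            if d > gate_m:
                break  # every remaining pair is even farther apart
            if tid in used_tracks or ids[j] != -1:
                continue
            ids[j] = tid
            used_tracks.add(tid)
        for j in range(len(boxes)):
            if ids[j] == -1:
                ids[j] = next_id
                next_id += 1
        prev = {tid: boxes[j] for j, tid in enumerate(ids)}
        out.append(ids)
    return out
```

A production labeller would typically add motion prediction and a buffer for briefly occluded objects; this sketch only shows why a temporal pass yields IDs that stay stable when detection order changes between frames.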

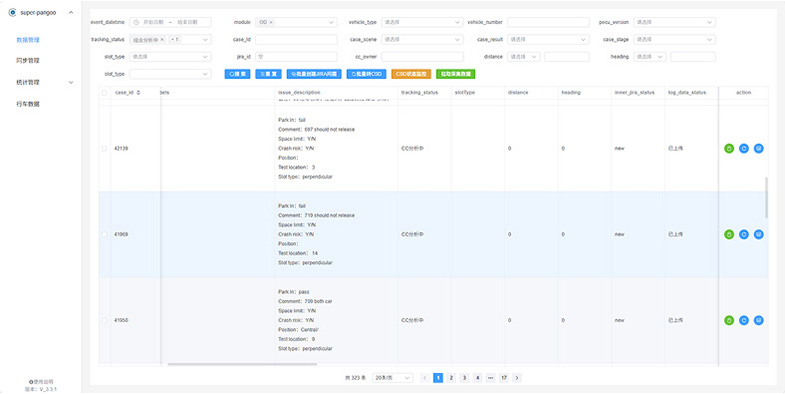

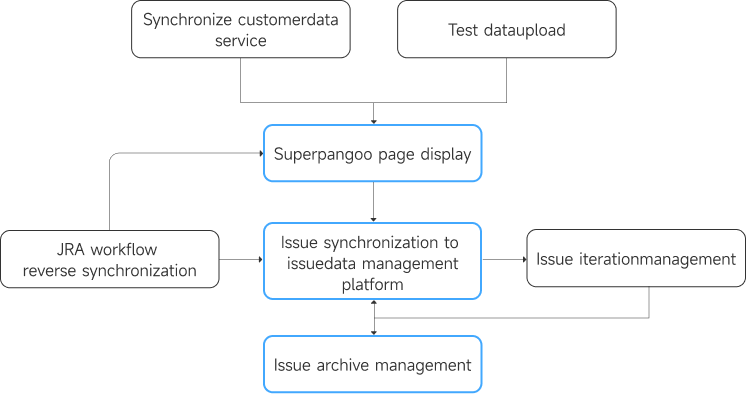

To better track the entire development and delivery process and provide customers with high-quality, timely support, we developed SuperPangoo, a closed-loop issue data management platform. The platform supports rapid logging, assignment, processing and archiving of issues, and interfaces seamlessly with the mainstream workflow management systems used by our customers, ensuring that all customer issues are trackable and traceable both internally and externally. Through SuperPangoo, we synchronize issue resolution progress and plans in real time and automatically transmit key information back to customers’ internal management systems, keeping customers informed of issue handling status. SuperPangoo significantly improves customer experience and satisfaction.
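The logging, assignment, processing and archiving lifecycle maps naturally onto a small state machine whose transitions trigger a sync callback toward the customer's system. The states, allowed transitions (including reassign and reopen) and the `notify` callback signature below are assumptions for illustration; SuperPangoo's real workflow and integrations are not described in the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional, Set, Tuple


class State(Enum):
    LOGGED = "logged"
    ASSIGNED = "assigned"
    IN_PROGRESS = "in_progress"
    RESOLVED = "resolved"
    ARCHIVED = "archived"


# Hypothetical transition table: which states each state may move to.
ALLOWED: Dict[State, Set[State]] = {
    State.LOGGED: {State.ASSIGNED},
    State.ASSIGNED: {State.IN_PROGRESS},
    State.IN_PROGRESS: {State.RESOLVED, State.ASSIGNED},  # reassign if needed
    State.RESOLVED: {State.ARCHIVED, State.IN_PROGRESS},  # reopen on regression
    State.ARCHIVED: set(),
}

Notifier = Callable[[str, str, str], None]  # (customer_ref, new_state, note)


@dataclass
class Issue:
    issue_id: str
    customer_ref: str  # the issue's ID in the customer's own workflow tool
    state: State = State.LOGGED
    history: List[Tuple[State, str]] = field(default_factory=list)

    def transition(self, new_state: State, note: str = "",
                   notify: Optional[Notifier] = None) -> None:
        """Move to `new_state` if allowed, record it, and push the update to the customer."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state
        self.history.append((new_state, note))
        if notify is not None:
            notify(self.customer_ref, new_state.value, note)
```

Rejecting illegal transitions centrally is what keeps the internal record and the customer-facing copy consistent: every state change passes through one method, and that method is also where synchronization happens.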
